Readable s-expressions for Lisp-based languages: Lots of progress!

Lots has been happening recently in my effort to make Lisp-based languages more readable. A lot of programming languages are Lisp-based, including Scheme, Common Lisp, Emacs Lisp, Arc, Clojure, and so on. But many software developers reject these languages, at least in part because their basic notation (s-expressions) is very awkward.

The Readable Lisp s-expressions project has a set of potential solutions. We now have much more robust code (you can easily download, install, and use it, due to autoconfiscation), and we have a video that explains our solutions. The video on readable Lisp s-expressions is also available on YouTube.

We’re now at version 0.4. This version is very compatible with existing Lisp code, because the new notations are simply a set of additional abbreviations. There are three tiers: curly-infix expressions (which add infix), neoteric-expressions (which add a more conventional call format), and sweet-expressions (which deduce parentheses from indentation, reducing the number of required parentheses).

Here’s an example of (awkward) traditional s-expression format:

    (define (factorial n)
      (if (<= n 1)
        1
        (* n (factorial (- n 1)))))

Here’s the same thing, expressed using sweet-expressions:

    define factorial(n)
      if {n <= 1}
        1
        {n * factorial{n - 1}}

A sweet-expression reader could accept either format, actually, since these tiers are simply additional abbreviations and adjustments that you can make to an existing Lisp reader. If you’re interested, please go to the Readable Lisp s-expressions project web page for more information and an implementation - and please join us!
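To make the curly-infix tier a little more concrete, here is a minimal Python sketch of the simplest case: a curly-braced list with one operator in every second position is rewritten into prefix order. This is not the project's implementation. The function names (`curly_infix_to_prefix`, `to_sexpr`) and the list-of-tokens representation are assumptions made for illustration, and the neoteric call `factorial{n - 1}` is built by hand rather than parsed.

```python
def curly_infix_to_prefix(items):
    """Rewrite the contents of a simple curly-infix list into prefix order.

    `items` is an already-parsed list such as ["n", "<=", "1"]; nested
    Python lists stand for sub-expressions that were already converted.
    Only the simple case is handled: an odd number of items (at least
    three) where every second item is the same operator.
    """
    if len(items) < 3 or len(items) % 2 == 0:
        raise ValueError("not a simple infix list")
    operator = items[1]
    if any(op != operator for op in items[1::2]):
        raise ValueError("mixed operators are not handled in this sketch")
    return [operator] + items[0::2]


def to_sexpr(form):
    """Render a nested Python list as a traditional s-expression string."""
    if isinstance(form, list):
        return "(" + " ".join(to_sexpr(x) for x in form) + ")"
    return form


# factorial{n - 1} is a neoteric call: the function name followed by a
# curly-infix argument. Here it is assembled by hand (an assumption of
# this sketch, which does no real parsing).
inner = ["factorial", curly_infix_to_prefix(["n", "-", "1"])]
product = curly_infix_to_prefix(["n", "*", inner])
print(to_sexpr(product))  # prints (* n (factorial (- n 1)))
```

The printed form matches the last line of the traditional example above, which is the point: the new notation is only an abbreviation for the same underlying list.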

path: /misc | Current Weblog | permanent link to this entry

Release government-developed software as OSS

I encourage people to sign the White House petition to “Maximize the public benefit of federal technology by sharing government-developed software under an open source license”. I, at least, interpret this to include software developed by contractors (since they receive government funding). I think this proposal makes sense. Sure, some software is classified, or export-controlled, or for some other specific reason should not be released to the public. But those should be exceptions. If we the people paid to have it developed, then we the people should get it!

It is true that many petitions do not get action right away, but that isn’t taking the long view. Often an issue has to be repeatedly raised before anything happens. So just because something doesn’t happen once doesn’t mean it was a waste of time. The Consumer Financial Protection Bureau has a “default share” policy so it is possible.

path: /oss | Current Weblog | permanent link to this entry

FYI, opensource.com just posted an interview with me: “5 Questions with David A. Wheeler” by Melanie Chernoff, Opensource.com, 2012-07-17.

How to have a successful open source software (OSS) project: Internet Success

The world of the future belongs to the collaborators. But how, exactly, can you have a successful project with collaborators? Can we quantitatively analyze past projects to figure out what works, instead of just using our best guesses? The answer, thankfully, is yes.

I just finished reading the amazing Internet Success: A Study of Open-Source Software Commons. This landmark book by Charles M. Schweik and Robert C. English of the Massachusetts Institute of Technology (MIT) presents the results of five years of painstaking quantitative research to answer this question: “What factors lead some open source software (OSS) commons (aka projects) to success, and others to abandonment?”

If you’re doing serious research in how collaborative development projects succeed (or not), you have to get this book. If you’re running a project, you should apply its results, and frankly, you’d probably get quite a bit of insight about collaboration from reading it. The book focuses specifically on the development of OSS, but as the authors note, many of its lessons probably apply elsewhere. Here’s a quick review and summary.

Schweik and English examined over 100,000 projects on SourceForge, using data from SourceForge and developer surveys. Their approach to data collection and analysis is spelled out in detail in the book; the key is that they took the time to deeply dive into it. Many previous studies have focused on just a few projects, and they summarize those; while those are useful, they don’t tell the whole story. Schweik and English instead cover a broad array of projects, using quantitative analysis instead of guesswork.

Fair warning: The book is quite technical. People who are not used to statistical analysis will find some parts quite mysterious, and the authors answer a lot of questions you might not even have thought to ask. Because this is serious scientific research, they carefully define terms and walk through an avalanche of data. The key, though, is that they managed to find useful answers in the data, and their results are actually quite understandable.

They spend a whole chapter (chapter 7) defining the terms “success” and “abandonment”. The definitions of these terms are key to the whole study, so it makes sense that they spend time to define them. Interestingly, they switched to the term “abandonment” instead of the more common term “failure”; they found that “many projects that had ceased collaborating would not be seen as failed projects”, e.g., because that project code had been absorbed into another project or the developer had improved their development skills (where this was their purpose).

They use a very simple project lifecycle model: projects begin in initiation, and once the project has made its first software release, it switches to growth. They also categorized projects as success, abandonment, or indeterminate. Combining these produces 6 categories of project: success initiation (SI); abandonment initiation (AI); success growth (SG); abandonment growth (AG); indeterminate initiation (II); and indeterminate growth (IG). Their operational definition of success initiation (SI) is oversimplified but easy to understand: an SI project has at least one release. Their operational definition for a success growth (SG) project is very generous: at least 3 releases, at least 6 months between releases, and more than 10 downloads. Chapter 7 gives details on these; I note these here because it’s hard to follow most of the book without knowing these categories. I could argue that these are really too generous a definition of success, but even with those definitions, they had many projects which did not meet them, and it is important to understand why (so that future projects would be more likely to succeed).
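As a rough illustration of how simple the two success definitions summarized above are, here is a small Python sketch. It is only my reading of the summary, not the authors' code or their data schema: the `Project` record and its field names are hypothetical, and "at least 6 months between releases" is interpreted (an assumption) as roughly 182 days between the first and the last release. The book's chapter 7 has the authoritative definitions, including the abandonment and indeterminate categories, which are not modeled here.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Project:
    """Hypothetical project record; the field names are assumptions."""
    release_dates: List[date]
    downloads: int


def meets_success_initiation(project: Project) -> bool:
    # SI as summarized above: the project has at least one release.
    return len(project.release_dates) >= 1


def meets_success_growth(project: Project) -> bool:
    # SG as summarized above: at least 3 releases, at least 6 months
    # between releases, and more than 10 downloads.
    # Assumption: the 6-month condition is read as about 182 days
    # between the first and the last release.
    if len(project.release_dates) < 3 or project.downloads <= 10:
        return False
    span = max(project.release_dates) - min(project.release_dates)
    return span.days >= 182


# Made-up example data, for illustration only.
example = Project(
    release_dates=[date(2010, 1, 15), date(2010, 6, 1), date(2011, 2, 1)],
    downloads=250,
)
print(meets_success_initiation(example), meets_success_growth(example))
```

Seen this way, it is easy to see why I call the growth definition generous: three releases spread over half a year and eleven downloads would qualify.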

They had so much data that even supercomputers could not directly process it. Given today’s computing capabilities, that’s pretty amazing.

So, what did they learn? Quite a bit. A few specific points are described in chapter 12. For example, they had presumed that OSS projects with limited competition would be more successful, but the effect is actually mildly the other way; “successful growth (SG) projects are more frequently found in environments where there is more competition, not less”. Unsurprisingly, projects with financial backing are “much more likely to be successful than those that are not” once they are in growth stage; although financing had an effect, its effects were not as strong in initiation.

As with any research material, if you don’t have time for the details, it’s a good idea to jump to the conclusions, which in this book is chapter 13. So what does it say?

One of the key results is that during initiation (before first release), the following are the most important issues, in order of importance, for success in an OSS project:

None of these are earth-shattering surprises, but now they are confirmed by data instead of being merely guessed at. In particular, some items that people have claimed are important, such as keeping complexity low, were not really supported as important. In fact, successful projects tended to have a little more complexity. That is probably not because a project should strive for complexity. Instead, I suspect both successful and abandoned projects often strive to reduce complexity (so it is not really something that distinguishes them), and I suspect sometimes a project that focuses on user needs has to have more complexity than one that does not, simply because user needs can sometimes require some complexity.

Similarly, they had guidance for growth projects, in order of importance:

They also have some hints of how potential OSS users (consumers) can choose OSS that is more likely to endure. Successful OSS projects have characteristics like more than 1000 downloads, users participating in bug tracker and email lists, goals/plans listed, a development team that responds quickly to questions, a good web site, good user documentation, and good developer documentation. A larger development team is a good sign, too.

These are just some of the research highlights. For details, well, get the book!

If you’re looking for more detailed guidance on how to run an OSS project, then a good place to go is “Producing Open Source Software: How to Run a Successful Free Software Project” by Karl Fogel. If you want to do it with or in the U.S. government, you might look at Open Technology Development (OTD): Lessons Learned & Best Practices for Military Software - OSD Report, May 2011 (full disclosure: I am co-author). Both of them were written before these research results were reported, but I think they are all quite consistent with each other.

I want to give some extra kudos to the authors: They have made a vast amount of their data available so that analysis can be re-done, and so that additional analysis can be done. (They held back some survey data due to personally-identifying information issues, which is reasonable enough.) Science depends on repeatability, yet much of today’s so-called “science” does not publish its data or analysis software, and thus cannot be repeated… and thus is not science.

The book is not perfect. It’s big and rather technical in some spots, which will make it hard reading for some. An unfortunate blot is that, while they’re usually extremely precise, there are serious ambiguities in their discussion on licensing. In particular, they have fundamentally inconsistent definitions for the terms “GPL-compatible” and “GPL-incompatible” throughout the book, making their license analysis results suspect. On page 22, they define the term “GPL-incompatible” in an extremely bizarre and non-standard way; they define “GPL-incompatible” as software in which “firms can derive new works from OSS, but are not obliged to license new derivatives under the GPL [and] are not obligated to expose the code logic in [derivative products].” In short, they seem to be using the term “GPL-compatible” as a synonym for what the rest of the world would call a “reciprocal” or “protective” license. Similarly, they seem to be defining the term “GPL-incompatible” to mean a “permissive” license. I don’t like non-standard terminology, but as long as unusual terms are defined clearly, I can deal with bizarre terminology. Yet later, on page 157, they define “GPL-compatible” completely differently, and give it its conventional meaning instead. That is, they define “GPL-compatible” as software that can be combined with the GPL (which includes not just the reciprocal GPL license, but also many permissive licenses like the MIT license). My initial guess is that the page 22 text is just wrong, but it’s hard to be sure.

There is another wrinkle, too, presuming that they meant the term “GPL-compatible” in the usual sense (and that page 22 is just wrong). One of the more popular licenses, the Apache License 2.0, has recently become GPL-compatible (on release of the GPL version 3), even though it wasn’t before. It’s not clear from the book that this is reflected in their data (at least I didn’t see it), if they actually used the term “GPL-compatible” in its usual sense, and there is enough Apache-licensed software that this would matter.

This may just be a poor explanation of terms, but until this is cleared up, I would be cautious about its comments on licensing. Hopefully they will clear this up, and in addition, it would probably be very useful to re-run the licensing analysis to examine (1) GPL-compatible vs. GPL-incompatible licenses, and (2) the typical three license categories (permissive, weakly protective/reciprocal, and strongly protective/reciprocal).

So if you are interested in the latest research on how OSS projects become successful (or not), pick up Internet Success: A Study of Open-Source Software Commons. This book is a milestone in the serious study of collaborative development approaches.

What’s especially intriguing is that success is very achievable. While initiating your project you should keep at it and communicate (articulate the vision and goals, have a high-quality web site to showcase/promote the project, create good documentation, and advertise). Once it’s growing, work to attract more users and developers.

Antideficiency Act and the Apache License

Some people are claiming that the U.S. federal government law called the “antideficiency act” means that the U.S. government cannot use any software released under the Apache 2.0 license. This is nonsense, but it’s a good example of the nonsense that impedes government use and co-development of some great software. Here’s why this is nonsense.

First, I should note that in my earlier post, Open Source Software volunteers forbidden in government? (Antideficiency Act), I explained that the US government rule called the “antideficiency act” (ADA) doesn’t interfere with the government’s use of open source software (OSS), even if it is created by people who are “volunteers”. As long as the volunteers intend or agree that their work is gratuitous (no-charge), there’s no problem. The antideficiency act says that you can’t create a moral obligation to pay without Congress’ consent; the government can accept materials even if they are provided at no cost.

The GAO has a summary describing the Antideficiency Act (ADA), Pub.L. 97-258, 96 Stat. 923. It explains that the ADA prohibits “federal employees from:

Software licenses sometimes include indemnification clauses, and those clauses can run afoul of this act if the clauses require the government to grant a possibly unlimited future liability (or any liability not already appropriated). But some lawyers act as if the word “indemnification” is some kind of magic curse word. The word “indemnification” is not a magic word that makes a license automatically unacceptable for government use. As always, whether a license is okay or not depends on what the license actually says.

The license that seems to trigger problems in some lawyers is the Apache 2.0 license, a popular OSS license. Yet the Apache license version 2.0 does not require such broad indemnification. The Apache 2.0 license clause 9 (“Accepting Warranty or Additional Liability”) instead requires that a redistributor provide indemnification only when additional conditions are met - in this case, when the redistributor provides warranty or indemnification. Clause 9 says in full, “While redistributing the Work or Derivative Works thereof, You may choose to offer, and charge a fee for, acceptance of support, warranty, indemnity, or other liability obligations and/or rights consistent with this License. However, in accepting such obligations, You may act only on Your own behalf and on Your sole responsibility, not on behalf of any other Contributor, and only if You agree to indemnify, defend, and hold each Contributor harmless for any liability incurred by, or claims asserted against, such Contributor by reason of your accepting any such warranty or additional liability.”

In short (and to oversimplify), “if you indemnify your downstream (recipients), you have to indemnify your upstream (those you received it from)”. There is a reason for clauses like this; it helps counter some clever shenanigans by competitors who might want to harm a project. If a competitor set up a situation to legally protect that software’s users while legally exposing its developers to heightened risk, after a while there would be no developers. This clause prevents this. (This is yet another example of why you should reuse a widely-used OSS license instead of writing your own; most people would never have thought of this issue.)

It is extremely unlikely that any government agency would trigger this clause by warrantying the software or indemnifying a recipient, so it is quite unlikely that this clause would ever be triggered by government action. But in any case, it would be this later action, not mere acceptance of the Apache 2.0 license, that would potentially run afoul of the ADA. This is simply the same as usual; the government typically does not warranty or indemnify software it releases, and if it did, it would have to determine that value and lawfully receive funding to do it.

There’s an additional wrinkle on this stuff. The legal field, like the software field, is so large that many people specialize, and sometimes the right specialists don’t get involved. Reviewing software licenses is normally the domain of so-called “intellectual property” lawyers, who really should be called “data rights” lawyers. (I’ve commented elsewhere that the term “intellectual property” is dangerously misleading, but that is a different topic.) But I’ve been told that at least in some organizations, the people who really understand the antideficiency act are a different group of lawyers (e.g., those who specialize in finance). So if a data rights lawyer comes back with antideficiency act questions, find out if that lawyer is the right person to talk to; it may be that the question really should be forwarded to a lawyer who specializes in that instead.

Now I am no lawyer, and this blog post is not legal advice. Even if I were a lawyer, I am not your lawyer; specific facts can create weird situations. There is no formal ruling on this matter, either, more’s the pity. However, this conclusion that I’m describing has been previously reached by others; in particular, see “Army lawyers dismiss Apache license indemnification snafu”, Fierce Government IT, March 8, 2012. What’s more, other lawyers I’ve talked to have agreed that this makes sense. Basically, the word “indemnification” is not a magic curse word when it is in a license: you have to actually read the license, and then determine if it is a problem or not.

More broadly, this is (yet another) example of a misunderstanding in the U.S. federal government that impedes the use and collaborative development of open source software (aka OSS or FLOSS). I believe the U.S. federal government does not use or co-develop OSS to the extent that it should, and in some cases it is because of misunderstandings like this. So if this matters to you, spread the word — often rules that appear to be problems are not problems at all.

I’ve put this information in the MIL-OSS FAQ so others can find out about this.

Open Source Software volunteers forbidden in government? (Antideficiency Act)

Sometimes people ask me if open source software (OSS) is forbidden in the U.S. federal government due to a prohibition on “voluntary services”. Often they don’t even know exactly where this prohibition is in law; they just heard third-hand that there was some problem.

It turns out that there is no problem, as I will explain. Please spread the word to those who care! Even if this isn’t your specific problem, I think this question can provide some general lessons about how to deal with government laws and regulations that, on first reading, do not make sense.

The issue here is something called the “antideficiency act” (ADA), specifically the part of the ADA in 31 U.S.C. § 1342, Limitation on voluntary services. This statute says that, “An officer or employee of the United States Government or of the District of Columbia government may not accept voluntary services for either government or employ personal services exceeding that authorized by law except for emergencies involving the safety of human life or the protection of property…”

Now at first glance, this text could appear to forbid OSS. Historically, OSS was developed by volunteers, and a lot of OSS is still created by people who aren’t paid to write it. A lot of OSS developers are paid to write software today, often at a premium, but that doesn’t help either. After all, often the government is not the one paying for the development, so at first glance this still sounds like “volunteer” work. After all, the company who is paying is still “volunteering” the software to the government!

In fact, it’s even worse. It appears to forbid the government from working with volunteer organizations like the Red Cross. In fact, it becomes hard to imagine how the government can work with various non-government organizations (NGOs) — most depend greatly on volunteers!

But as is often the case, if there’s a government law or regulation that doesn’t make sense, you should dig deeper to find out what it actually means. Often there are court cases or official guidance documents that explain things, and often you’ll find out that the law or regulation means something very different than you might expect. I’ve found that in the US government (or in law), the problems are often caused because a key term doesn’t mean what you might expect it to mean. In this case, the word “voluntary” does not mean what you might think it means.

The US Government Accountability Office (GAO) Office of the General Counsel’s “Principles of Federal Appropriations Law” (aka the “Red Book”) explains federal appropriation law. Volume II of its third edition, section 6.C.3, describes in detail this prohibition on voluntary services. Section 6.C.3.a notes that the voluntary services provision is not new; it first appeared, in almost identical form, back in 1884. The red book explains its purpose; since “an agency cannot directly obligate in excess or advance of its appropriations, it should not be able to accomplish the same thing indirectly by accepting ostensibly ‘voluntary’ services and then presenting Congress with the bill, in the hope that Congress will recognize a ‘moral obligation’ to pay for the benefits conferred…”

The red book section 6.C.3.b states that in 1913, the Attorney General developed an opinion (30 Op. Att’y Gen. 51 (1913)) that “has become the leading case construing 31 U.S.C. § 1342… the Attorney General drew a distinction that the Comptroller of the Treasury thereafter adopted, and that GAO and the Justice Department continue to follow to this day: the distinction between ‘voluntary services’ and ‘gratuitous services.’” Some key text from this opinion, as identified by the red book, is: “[I]t seems plain that the words ‘voluntary service’ were not intended to be synonymous with ‘gratuitous service’ … it is evident that the evil at which Congress was aiming was not appointment or employment for authorized services without compensation, but the acceptance of unauthorized services not intended or agreed to be gratuitous and therefore likely to afford a basis for a future claim upon Congress… .” More recent decisions, such as the 1982 decision B-204326 by the U.S. Comptroller General, continue to confirm this distinction between “gratuitous” and “voluntary” service.

So here we have a word (“voluntary”) that has a very special meaning in these regulations that is different from its usual meaning. I expect that a lot of the problem is that this word dates from 1884; words change their meaning over time. And changing laws is hard; lawmakers rarely change a text just because it’s hard for ordinary people to understand.

In short, the ADA’s limitation on voluntary services does not broadly forbid the government from working with organizations and people who identify themselves as volunteers, including those who develop OSS. Instead, the ADA prohibits government employees from accepting services that are not intended or agreed to be given freely (gratuitous), but were instead rendered in the hope that Congress will subsequently recognize a moral obligation to pay for the benefits conferred. Services that are intended and agreed to be gratuitous do not conflict with this statute. In most cases, contributors to OSS projects intend for their contributions to be gratuitous, and provide them for all (not just for the Federal government), clearly distinguishing such OSS contributions from the “voluntary services” that the ADA was designed to prevent.

I’ve recorded this information on the MIL-OSS FAQ at http://mil-oss.org/learn-more/frequently-asked-questions-on-open-source-software-oss so that others can learn about this.

When you have questions about OSS and US federal government, good places for information/guidance include the following (the DoD-specific ones have information that may be useful elsewhere):

On June 7, 2012, 2-3pm Eastern Time, I’ll be speaking as part of the free webinar “Lessons Learned: Roadblocks and Opportunities for Open Source Software (OSS) in U.S. Government” hosted by GovLoop. The webinar will feature a recent Department of Homeland Security (DHS) Homeland Open Security Technology (HOST) report that I co-authored, which discusses key roadblocks and opportunities in the government application of open source software, as reported in interviews of experts, suppliers, and potential users. Join us!

Award, and learn how to develop secure software!

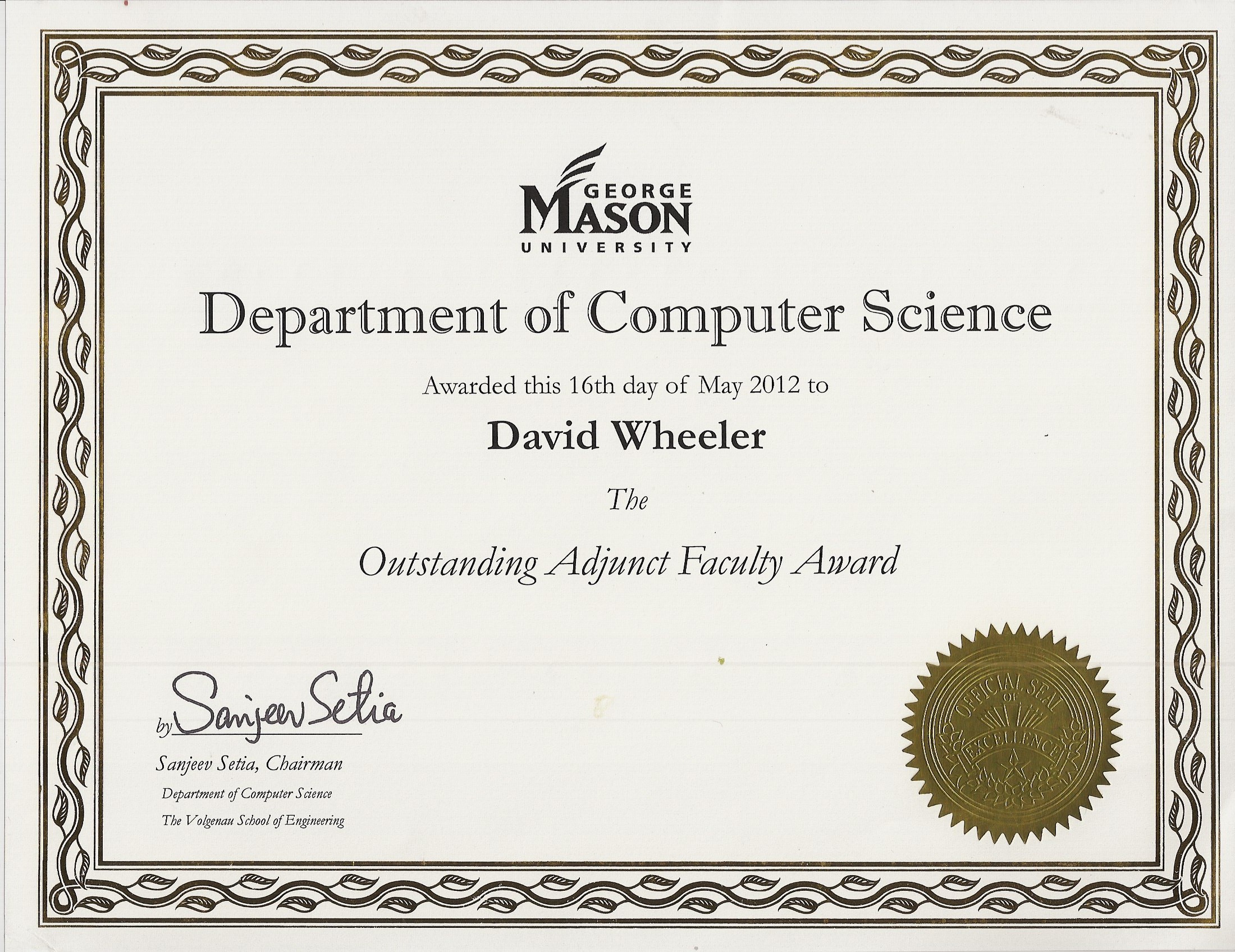

I just received an award from George Mason University (GMU) — thank you! I’m grateful, but I think this award means something bigger, too: Anyone developing software should learn how to develop secure software (you might even get a raise!). Here’s how I connect those seemingly unconnected points.

First, the award. I received the “outstanding adjunct faculty” award from GMU’s Department of Computer Science on May 16, 2012. This award is based on comments from both students and faculty. Thank you! Although it’s not the only class I’ve taught at GMU, I’m mainly known for teaching Secure Software Design and Programming (SWE-781/ISA-681). It was this work, teaching SWE-781/ISA-681, that was specifically cited in the award ceremony by Sanjeev Setia (chairman of the Computer Science department).

I have a passion for developing secure software. I believe that today’s software developers need to know how to develop secure software, because most of today’s programs routinely connect to a network or take data from one. If you’re a software developer, please consider taking a course that teaches you how to develop secure software (or take courses that embed that information in them). If your college/university doesn’t offer it, tell them that they need such material. And if you influence the selection of courses available at a college/university, please convince them to add it! I am delighted that George Mason University offers this course; I believe it is important.

Perhaps my favorite story from my class is that one of my students got a raise at work by applying the material he learned in class. Another student reported that he was asked at his work to present his school project and to help organize an effort to raise software security awareness. Here are some quotes from former students (using their name when they said it was okay):

I hope these reports will convince you that anyone developing software should learn how to develop secure software, such as by taking a course like this. People are reporting that this course was really valuable, and in some sense, I am receiving an award because this material is directly useful and important. If you’re at GMU, or considering it, by all means take my class! And again, if you influence the courses taught at a college or university, please make sure that they teach how to develop secure software in some way. The knowledge of how to make more secure programs exists; now we need to share it with the people who need it.

Oh, and here is the certificate; my thanks to everyone who recommended me.

path: /security | Current Weblog | permanent link to this entry

In some presentations I include the “magic cookie parable”. Here is the parable, for those who have not heard it (I usually hold a cookie in my hand when I present it). Anyway…

I have in my hand… a magic cookie! Just one cookie will supply all your food needs for a whole year. What is more, the first one is only $1. Imagine how much money you will save! Imagine how much time you will save!

Ah, but there’s a catch. Once you eat the magic cookie, you can only eat magic cookies, as all other food will become poisonous to you. What’s more, there is only one manufacturer of magic cookies.

Do you think the cookie will be $1 next year? How about for the rest of your life? Are you as eager to eat the cookie?

Is that a silly parable? It should be. Yet many people accept information technology (IT), for themselves or on behalf of their organizations, that is fundamentally a magic cookie. Too many are blinded into accepting technology that leaves them, or their organization, completely at the mercy of a single supplier. You can call dependence on a single supplier a security problem, or a supply chain problem, or a support problem, or many other things. But no matter what you call it, it is a serious problem.

Now please do not hear what I am not saying. I am not here to attack any particular supplier. In fact, we all need suppliers, and I am grateful for suppliers! The problem is not the existence of suppliers; the problem is excessive dependency on any one supplier.

There are only a few information technology (IT) strategies that counter sole-supplier dependency that I know of:

Before getting locked into a single supplier, count the true cost over the entire time it will occur. Sure, in some cases, it may be worth it anyway. But you may find that this true cost is far higher than you are willing to pay. (The cookie image is by Bob Smith, released under the CC Attribution 2.5 license. Thank you!)

DoD Open Source Software (OSS) Pages Moved

The US Department of Defense (DoD) has changed the URLs for some of its information on Open Source Software (OSS). Unfortunately, there are currently no redirects, and that makes them hard to find (sigh). Here are new links, if you want them.

A good place to start is the Department of Defense (DoD) Free Open Source Software (FOSS) Community of Interest page, hosted by the DoD Chief Information Officer (CIO).

From that page, you can reach:

If you are interested in the topic of DoD and OSS, you might also be interested in the Military Open Source Software (Mil-OSS) group, which is not a government organization, but is an active community.

Insecure open source software libraries?

The news is abuzz about a new report, “The Unfortunate Reality of Insecure Libraries” (by Aspect Security, in partnership with Sonatype). Some news articles about it, like “Open source code libraries seen as rife with vulnerabilities” (Network World), make it sound like open source software (OSS) is especially bad. (To be fair, they do not literally say that, but many readers might infer it.)

However, if you look at the report, you see something quite different. The report directly states that, “This paper is not a critique of open source libraries, and we caution against interpreting this analysis as such.” They only examined open source Java libraries, but their “experience in evaluating the security of hundreds of custom applications indicates that the findings are likely to apply to closed-source and commercial libraries as well.”

This is a valuable report, because it points out a general problem not specific to OSS.

The problem is that software libraries (OSS or not) are not being adequately managed, leading to a vast number of vulnerabilities. For example, the report states that “The data show that most organizations do not appear to have a strong process in place for ensuring that the libraries they rely upon are up-to-date and free from known vulnerabilities.” They point out that “development teams readily acknowledge, often with some level of embarrassment, that they make no efforts to keep their libraries up-to-date.” They also note that “Organizations download many old versions of libraries… If people were updating their libraries, we would have expected the popularity of older libraries to drop to zero within the first two years. However, the data clearly show popularity extending back over six years…. The continuing popularity of libraries for extended months suggests that incremental releases of legacy applications are not being updated to use the latest versions of libraries but are continuing to use older versions.” They recommend that software development organizations inventory, analyze, control, and monitor their libraries, and give details on each point.
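To make the "inventory and monitor" advice concrete, here is a minimal sketch of what an automated check might look like. It is my own illustration, not something from the report: the library names, the version numbers, and the idea of keeping the data in three simple dictionaries are all assumptions, and it only handles plain dotted numeric versions.

```python
from dataclasses import dataclass


def parse_version(text):
    """Turn '2.5.1' into (2, 5, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


@dataclass
class Finding:
    name: str
    in_use: str
    latest: str
    known_vulnerable: bool


def audit(inventory, latest_versions, vulnerable_versions):
    """Return a Finding for each library that is outdated or known-vulnerable.

    inventory:           {library name: version in use}
    latest_versions:     {library name: newest released version}
    vulnerable_versions: {library name: set of versions with known flaws}
    """
    findings = []
    for name, in_use in sorted(inventory.items()):
        # If we have no release data for a library, treat it as current.
        latest = latest_versions.get(name, in_use)
        vulnerable = in_use in vulnerable_versions.get(name, set())
        outdated = parse_version(in_use) < parse_version(latest)
        if outdated or vulnerable:
            findings.append(Finding(name, in_use, latest, vulnerable))
    return findings


if __name__ == "__main__":
    # Hypothetical data, for illustration only.
    inventory = {"examplelib": "1.2.0", "otherlib": "3.0.1"}
    latest = {"examplelib": "1.4.2", "otherlib": "3.0.1"}
    vulnerable = {"examplelib": {"1.2.0"}}
    for f in audit(inventory, latest, vulnerable):
        flag = "KNOWN VULNERABLE" if f.known_vulnerable else "outdated"
        print(f"{f.name}: using {f.in_use}, latest {f.latest} ({flag})")
```

The hard part in practice is keeping the three inputs accurate, not the comparison itself; a real process would feed them from your build system and from a vulnerability database.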

I should note that I’ve been saying some of these things for years. For years I have said that you should evaluate OSS before you use it… some software is better than others. Back in 2008 I also urged developers to use system libraries, at least as an option; embedding libraries often leads over time to the use of old (and vulnerable) libraries. An advantage of OSS is that many people can review the software, find problems (including vulnerabilities), and fix them… but this advantage is lost if the fixed versions are not used! And of course, if you develop software, you need to learn how to develop secure software. As the report notes, tools can be useful (I give away flawfinder), but tools cannot replace human knowledge and human review.

For more information, you should see their actual report, “The Unfortunate Reality of Insecure Libraries” (by Aspect Security).

path: /oss | Current Weblog | permanent link to this entry

Software patents may silence little girl

Software patents are hurting the world, but the damage they do is often hard to explain and see.

But Dana Nieder’s post “Goliath v. David, AAC style” has put a face on the invisible scourge of software patents. As she puts it, a software patent has put her “daughter’s voice on the line. Literally. My daughter, Maya, will turn four in May and she can’t speak.” After many tries, the parents found a solution: A simple iPad application called “Speak for Yourself” that implements “augmentative and alternative communication” (AAC). Dana Nieder said, “My kid is learning how to ‘talk.’ It’s breathtaking.”

But now Speak for Yourself is being sued by the much bigger Semantic Compaction Systems and Prentke Romich Company (SCS/PRC), who claim that the smaller Speak for Yourself is infringing SCS/PRC’s patents. If SCS/PRC wins their case, the likely outcome is that these small apps will completely disappear, eliminating the voice of countless children. The reason is simple: Money. SCS/PRC can make $9,000 by selling one of their devices, so they have every incentive to eliminate software applications that cost only a few hundred dollars. Maya cannot even use the $9,000 device, and even if she could, it would be an incredible hardship on a Bronx family with income from a single 6th grade math teacher. In short, if SCS/PRC wins, they will take away the voice of this little girl, who is not yet even four, as well as countless others.

I took a quick look at the complaint, Semantic Compaction Systems, Inc. and Prentke Romich Company, v. Speak for Yourself LLC; Renee Collender, an individual; and Heidi Lostracco, an individual, and it is horrifying at several levels. Point 16 says that the key “invention” is this misleadingly complicated paragraph: “A dynamic keyboard includes a plurality of keys, each with an associated symbol, which are dynamically redefinable to provide access to higher level keyboards. Based on sequenced symbols of keys sequentially activated, certain dynamic categories and subcategories can be accessed and keys corresponding thereto dynamically redefined. Dynamically redefined keys can include embellished symbols and/or newly displayed symbols. These dynamically redefined keys can then provide the user with the ability to easily access both core and fringe vocabulary words in a speech synthesis system.”

Strip away the gobbledygook, and this is a patent for using pictures as menus and sub-menus. This is breathtakingly obvious, and was obvious long before this was patented. Indeed, it would have been obvious to most non-computer people. But this is the problem with many software patents; once software patents were allowed (for many years they were not, and they are still not allowed in many countries), it became hard to figure out where to stop.

One slight hope is that there is finally some effort to curb the worst abuses of the patent system. The Supreme Court decided on March 20, 2012, in Mayo v. Prometheus, that a patent must do more than simply state some law of nature and add the words “apply it.” This was a unanimous decision by the U.S. Supreme Court, remarkable and unusual in itself. You would think this would be obvious, but believe it or not, the lower court actually thought this was fine. We’ve gone through years where just about anything can be patented. Because software patents and business method patents are allowed, the Patent and Trademark Office has become swamped with patent applications, often for obvious or already-implemented ideas. Other countries avoid such abuse by simply not allowing these kinds of patents in the first place, which gives them time to review the rest. See my discussion about software patents for more.

My hope is that these patents are struck down, so that this 3-year-old girl will be allowed to keep her voice. Even better, let’s strike down all the software patents; that would give voice to millions.

path: /oss | Current Weblog | permanent link to this entry

Introduction to the autotools (autoconf, automake, libtool)

I’ve recently posted a video titled “Introduction to the autotools (autoconf, automake, and libtool)”. If you develop software, you might find this video useful. So, here’s a little background on it, for those who are interested.

The “autotools” are a set of programs for software developers that include at least autoconf, automake, and libtool. The autotools make it easier to create or distribute source code that (1) portably and automatically builds, (2) follows common build conventions (such as DESTDIR), and (3) provides automated dependency generation if you’re using C or C++. They’re primarily intended for Unix-like systems, but they can be used to build programs for Microsoft Windows too.

The autotools are not the only way to create source code releases that are easily built and packaged. Common and reasonable alternatives, depending on your circumstances, include CMake, Apache Ant, and Apache Maven. But the autotools are among the most widely used such tools, especially for programs that use C or C++ (though they’re not limited to that). Even if you choose to not use them for projects you control, if you are a software developer, you are likely to encounter the autotools in programs you use or might want to modify.

Years ago, the autotools were hard for developers to use and they had lousy documentation. The autotools have significantly improved over the years. Unfortunately, there’s a lot of really obsolete documentation, along with a lot of obsolete complaints about autotools, and it’s a little hard to get started with them (in part due to all this obsolete documentation).

So, I have created a little video introduction at http://www.dwheeler.com/autotools that I hope will give people a hand. You can also view the video via YouTube (I had to split it into parts) as Introduction to the autotools, part 1, Introduction to the autotools, part 2, and Introduction to the autotools, part 3.

The entire video was created using free/libre/open source software (FLOSS) tools. I am releasing it in the royalty-free webm video format, under the Creative Commons CC-BY-SA license. I am posting it to my personal site using the HTML5 video tag, which should make it easy to use. Firefox and Chrome users can see it immediately; IE9 users can see it once they install a free webm driver. I tried to make sure that the audio was more than loud enough to hear, the terminal text was large enough to read, and that the quality of both is high; a video that cannot be seen or heard is ridiculous.

This video tutorial emphasizes how to use the various autotools pieces together, instead of treating them as independent components, since that’s how most people will want to use them. I used a combination of slides (with some animations) and the command line to help make it clear. I even walk through some examples, showing how to do some things step by step (including using git with the autotools). This tutorial gives simple quoting rules that will prevent lots of mistakes, explains how to correctly create the “m4” subdirectory (which is recommended but not fully explained in many places), and discusses why and how to use a non-recursive make. It is merely an introduction, but hopefully it will be enough to help people get started if they want to use the autotools.

path: /oss | Current Weblog | permanent link to this entry

Debian GNU/Linux = $19 billion

Debian developer James Bromberger recently posted the interesting “Debian Wheezy: US$19 Billion. Your price… FREE!”, where he explains why the newest Debian distribution (“Wheezy”) would have cost US$19 billion to develop if it had been developed as proprietary software. This post was picked up in the news article “Perth coder finds new Debian ‘worth’ $18 billion” (by Liam Tung, IT News, February 14, 2012).

You can view this as an update of my More than a Gigabuck: Estimating GNU/Linux’s Size, since it uses my approach and even uses my tool sloccount. Anyone who says “open source software can’t scale to large systems” clearly isn’t paying attention.
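For those curious how a line count turns into a dollar figure, here is a minimal sketch of that style of estimate. It uses the Basic COCOMO "organic" formulas, and the salary and overhead defaults are the values I recall sloccount using by default; treat those numbers, and the function name, as assumptions for illustration. The Debian estimate may well have used different parameters, so this will not reproduce the $19 billion figure by itself.

```python
def basic_cocomo_cost(sloc, salary=56286.0, overhead=2.4):
    """Estimate development effort, schedule, and cost from a line count.

    sloc:     physical source lines of code
    salary:   assumed average developer salary per year (US dollars)
    overhead: assumed multiplier covering costs beyond salary
    Returns (effort in person-months, schedule in months, cost in dollars).
    """
    ksloc = sloc / 1000.0
    # Basic COCOMO, organic mode: effort grows slightly faster than size.
    effort_person_months = 2.4 * ksloc ** 1.05
    # Schedule is derived from effort, not directly from size.
    schedule_months = 2.5 * effort_person_months ** 0.38
    cost = (effort_person_months / 12.0) * salary * overhead
    return effort_person_months, schedule_months, cost


if __name__ == "__main__":
    # A made-up 100,000-line project, for illustration only.
    effort, schedule, cost = basic_cocomo_cost(100_000)
    print(f"Effort:   {effort:,.0f} person-months")
    print(f"Schedule: {schedule:,.1f} months")
    print(f"Cost:     ${cost:,.0f}")
```

Note that the exponent of 1.05 means doubling the code size more than doubles the estimated cost, which is part of why very large systems produce such large numbers.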

path: /oss | Current Weblog | permanent link to this entry

New Hampshire: Open source, open standards, open data

The U.S. state of New Hampshire just passed act HB418 (2012), which requires state agencies to consider open source software, promotes the use of open data formats, and requires the commissioner of information technology (IT) to develop an open government data policy. Slashdot has posted a discussion about it. This looks really great, and it looks like a bill that other states might want to emulate. My congrats go to Seth Cohn (the primary author) and the many others who made this happen. In this post I’ll walk through some of its key points on open source software, open standards for data formats, and open government data.

First, here’s what it says about open source software (OSS): “For all software acquisitions, each state agency… shall… Consider whether proprietary or open source software offers the most cost effective software solution for the agency, based on consideration of all associated acquisition, support, maintenance, and training costs…”. Notice that this law does not mandate that the state government must always use OSS. Instead, it simply requires government agencies to consider OSS. You’d think this would be useless, but you’d be wrong. Fairly considering OSS is still remarkably hard to do in many government agencies, so having a law or regulation clearly declare this is very valuable. Yes, closed-minded people can claim they “considered” OSS and paper over their biases, but laws like this make it easier for OSS to get a fair hearing. The law defines “open source software” (OSS) in a way consistent with its usual technical definition; indeed, this law’s definition looks a lot like the free software definition. That’s a good thing; the impact of laws and regulations is often controlled by their definitions, so having good definitions (like this one for OSS) is really important. Here’s the New Hampshire definition of OSS, which I think is a good one:

The material on open standards for data says, “The commissioner shall assist state agencies in the purchase or creation of data processing devices or systems that comply with open standards for the accessing, storing, or transferring of data…” The definition is interesting, too; it defines an “open standard” as a specification “for the encoding and transfer of computer data” that meets a long list of requirements, including that it “Is free for all to implement and use in perpetuity, with no royalty or fee” and that it “Has no restrictions on the use of data stored in the format”. The list is actually much longer; it’s clear that the authors were trying to counter the common tricks of vendors who try to create “open” standards that really aren’t. I think it would have been great if they had adopted the more stringent Digistan definition of open standard, but this is still a great step forward.

Finally, it talks about open government data, e.g., it requires that “The commissioner shall develop a statewide information policy based on the following principles of open government data”. This may be one of the most important parts of the bill, because it establishes these as the open data principles:

The official motto of the U.S. state of New Hampshire is “Live Free or Die”. Looks like they truly do mean to live free.

path: /oss | Current Weblog | permanent link to this entry

This website (www.dwheeler.com) was down part of the day yesterday due to a mistake made by my web hosting company. Sorry about that. It’s back up, obviously.

For those who are curious what happened, here’s the scoop. My hosting provider (WebHostGiant) moved my site to a new improved computer. By itself, that’s great. That new computer has a different IP address (the old one was 207.55.250.19, the new one is 208.86.184.80). That’d be fine too, except they didn’t tell me that they were changing my site’s IP address, nor did they forward the old IP address. The mistake is that the web hosting company should have notified me of this change, ahead of time, but they failed to do so. As a result, I didn’t change my site’s DNS entries (which I control) to point to its new location; I didn’t even know that I should, or what the new values would be. My provider didn’t even warn me ahead of time that anything like this was going to happen… if they had, I could have at least changed the DNS timeouts so the changeover would have been quick.
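If you want to catch this kind of mismatch yourself, a small check can compare what DNS currently returns with the address your provider says your site lives on. This is a minimal sketch of my own, not something my provider supplies; the function names are made up for illustration, and it only looks at IPv4 addresses as seen by your local resolver (which may be serving a cached answer until the DNS timeout expires).

```python
import socket


def resolve_ipv4(hostname):
    """Return the sorted IPv4 addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, None, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry ends with a (address, port) pair; keep the unique addresses.
    return sorted({info[4][0] for info in infos})


def check_dns(hostname, expected_ip, resolver=resolve_ipv4):
    """Return (ok, addresses); ok is True if DNS still points at expected_ip.

    The resolver parameter exists so the check can be tried without a network.
    """
    addresses = resolver(hostname)
    return expected_ip in addresses, addresses
```

For example, calling check_dns("www.dwheeler.com", "208.86.184.80") during the outage would have reported a mismatch, because my DNS entries still listed the old address.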

Now to their credit, once I put in a trouble ticket (#350465), Alex Prokhorenko (of WebhostGIANT Support Services) responded promptly, and explained what happened so clearly that it was easy for me to fix things. I appreciate that they’re upgrading the server hardware, I understand that IP addresses sometimes must change, and I appreciate their low prices. In fact, I’ve been generally happy with them.

But if you’re a hosting provider, you need to tell the customer if some change you make will make your customer’s entire site unavailable without the customer taking some action! A simple email ahead-of-time would have eliminated the whole problem.

Grumble grumble.

I did post a rant against SOPA and PIPA the day before, but I’m quite confident that this outage was unrelated.

Anyway, I’m back up.

path: /misc | Current Weblog | permanent link to this entry

Please protest the proposed SOPA (Stop Online Piracy Act) and PIPA (PROTECT IP Act). The English Wikipedia is blacked out today, and many other websites (like Google) are trying to raise awareness of these hideous proposed laws. The EFF has more information about PIPA and SOPA. Yes, the U.S. House has temporarily suspended its work, but that is just temporary; it needs to be clear that such egregious laws must never be accepted.

Wikimedia Foundation board member Kat Walsh puts it very well: “We [the Wikimedia Foundation and its project participants] depend on a legal infrastructure that makes it possible for us to operate. And we depend on a legal infrastructure that also allows other sites to host user-contributed material, both information and expression. For the most part, Wikimedia projects are organizing and summarizing and collecting the world’s knowledge. We’re putting it in context, and showing people how to make sense of it. But that knowledge has to be published somewhere for anyone to find and use it. Where it can be censored without due process, it hurts the speaker, the public, and Wikimedia. Where you can only speak if you have sufficient resources to fight legal challenges, or, if your views are pre-approved by someone who does, the same narrow set of ideas already popular will continue to be all anyone has meaningful access to.”

path: /oss | Current Weblog | permanent link to this entry